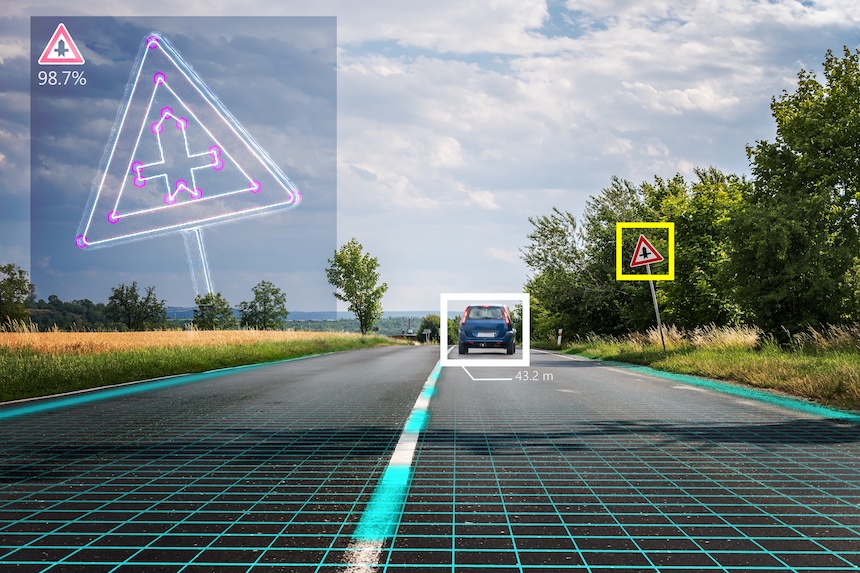

Computer vision is one of the fastest-growing areas of artificial intelligence (AI) today, and convolutional neural networks (CNNs) are among the most significant contributors to the progress made in computer vision research. At a cursory level, CNNs can look easy to use: stack several layers of convolutions, add some pooling, put a softmax at the end, and you have a computer vision model. If you want to understand CNNs and their building blocks in more depth, the tips below go a step further.

Convolutions

The convolution operation is the most crucial part of a convolutional neural network, so how you use convolutions affects how your network performs. In practice, you rarely gain much from kernels larger than 3×3. Research has also found that stacking 3×3 convolutions gives you the same receptive field (the region of the input that a unit in the network is looking at) as a single larger convolution: two stacked 3×3 layers cover a 5×5 region and three cover a 7×7 region, while using fewer weights.
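The receptive-field claim can be checked with a little arithmetic. The sketch below is plain Python, not a framework API; it assumes stride-1, undilated convolutions with the same number of input and output channels, ignores biases, and uses a hypothetical channel count of 64 purely for illustration.

```python
def stacked_receptive_field(num_layers: int, kernel_size: int = 3) -> int:
    """Receptive field of `num_layers` stacked stride-1, undilated convolutions.

    Each extra k x k layer grows the field by k - 1 pixels per side pair.
    """
    return 1 + num_layers * (kernel_size - 1)


def conv_weights(kernel_size: int, channels: int) -> int:
    """Weights of one k x k convolution with `channels` inputs and outputs (no bias)."""
    return kernel_size * kernel_size * channels * channels


channels = 64  # hypothetical channel count, chosen only for illustration
for layers, big_kernel in [(2, 5), (3, 7)]:
    rf = stacked_receptive_field(layers)
    stacked = layers * conv_weights(3, channels)
    single = conv_weights(big_kernel, channels)
    print(f"{layers} x 3x3: receptive field {rf}x{rf}, {stacked} weights "
          f"vs one {big_kernel}x{big_kernel}: {single} weights")
```

With these numbers, two 3×3 layers use about 28% fewer weights than one 5×5 layer, and three 3×3 layers about 45% fewer than one 7×7 layer, while also adding extra nonlinearities between them.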

You can also use 1×1 convolutions at some points in your network. A 1×1 convolution reduces the depth (the number of channels) of the feature maps before they are processed by a 3×3 convolution, without changing their height or width. Instead of processing a massive 256-channel feature map, you can first compress it into a smaller one, for example 64 channels, while keeping most of the useful information.
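To make "reducing depth" concrete, here is a minimal pure-Python sketch of a 1×1 convolution, assuming feature maps stored as nested lists indexed `[channel][row][col]` and no bias term. Each output pixel is just a weighted sum over the input channels at the same position, so only the channel count changes. The input values and mixing weights are hypothetical.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]


def conv1x1(x: FeatureMap, weights: List[List[float]]) -> FeatureMap:
    """Apply a 1x1 convolution without bias.

    weights[o][c] is the weight from input channel c to output channel o.
    Height and width are unchanged; the depth becomes len(weights).
    """
    c_in, height, width = len(x), len(x[0]), len(x[0][0])
    return [
        [[sum(weights[o][c] * x[c][i][j] for c in range(c_in)) for j in range(width)]
         for i in range(height)]
        for o in range(len(weights))
    ]


# Hypothetical 4-channel, 2x2 input; channel c is filled with the value c + 1.
fmap = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(4)]
mix = [[0.25, 0.25, 0.25, 0.25],  # output channel 0: mean of the 4 channels
       [1.0, 0.0, 0.0, -1.0]]     # output channel 1: channel 0 minus channel 3
y = conv1x1(fmap, mix)
print(len(y), len(y[0]), len(y[0][0]))  # 2 2 2
print(y[0][0][0], y[1][0][0])  # 2.5 -3.0
```

In a real network the mixing weights are learned, and a framework implements the same operation far more efficiently.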

Once the compression is done, you apply your 3×3 convolution. This is faster because the 3×3 convolution now operates on 64 rather than 256 feature maps, and this strategy is known to achieve more or less the same results as simply stacking 3×3 convolutions. At the end, you can restore the original 256-channel depth with another 1×1 convolution.
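The speed-up can be estimated by counting multiply-accumulate operations (MACs). This sketch compares a plain 3×3 convolution on 256 channels with the 256 → 64 → 64 → 256 sequence described above. It assumes stride 1, "same" padding, no biases, and a hypothetical 56×56 feature map; the spatial size cancels out of the ratio anyway.

```python
def conv_macs(height: int, width: int, k: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates of a stride-1 'same' k x k convolution (biases ignored)."""
    return height * width * k * k * c_in * c_out


H = W = 56  # hypothetical spatial size of the feature map

# Direct approach: a 3x3 convolution on the full 256-deep feature map.
direct = conv_macs(H, W, 3, 256, 256)

# Compressed approach: 1x1 down to 64, 3x3 at 64, 1x1 back up to 256.
compressed = (conv_macs(H, W, 1, 256, 64)
              + conv_macs(H, W, 3, 64, 64)
              + conv_macs(H, W, 1, 64, 256))

print(direct, compressed, round(direct / compressed, 2))
```

Under these assumptions the compressed path needs roughly 8.5 times fewer multiply-accumulates. Real speed-ups also depend on memory access and hardware, so treat this as an upper-level estimate rather than a benchmark.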

Activations are usually applied right after each convolution, and in code the two are often bundled together. ReLU is a reliable choice: it often does a better job than the alternatives without requiring complicated fine-tuning.
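The pairing of a convolution with its activation can be sketched in plain Python. This is a single-channel illustration under simplifying assumptions (one 3×3 kernel, stride 1, no padding, no bias); like most deep learning libraries, it computes cross-correlation, meaning the kernel is not flipped. The image and the edge-detecting kernel are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def conv3x3_valid(image: Grid, kernel: Grid) -> Grid:
    """Single-channel 3x3 convolution, stride 1, no padding (cross-correlation)."""
    h, w = len(image), len(image[0])
    return [[sum(kernel[di][dj] * image[i + di][j + dj]
                 for di in range(3) for dj in range(3))
             for j in range(w - 2)]
            for i in range(h - 2)]


def relu_map(grid: Grid) -> Grid:
    """Element-wise ReLU: max(0, x)."""
    return [[max(0.0, v) for v in row] for row in grid]


def conv_relu(image: Grid, kernel: Grid) -> Grid:
    """Convolution immediately followed by its activation, as in a typical block."""
    return relu_map(conv3x3_valid(image, kernel))


# Hypothetical 4x6 image with a bright vertical stripe, and a left-to-right edge kernel.
image = [[0.0, 0.0, 1.0, 1.0, 0.0, 0.0] for _ in range(4)]
kernel = [[-1.0, 0.0, 1.0] for _ in range(3)]
print(conv3x3_valid(image, kernel))  # [[3.0, 3.0, -3.0, -3.0], [3.0, 3.0, -3.0, -3.0]]
print(conv_relu(image, kernel))      # [[3.0, 3.0, 0.0, 0.0], [3.0, 3.0, 0.0, 0.0]]
```

ReLU keeps the positive responses to the rising edge and zeroes out the negative responses to the falling edge.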

There is a heated debate about the best activation type, but in practice the options usually differ only by a small margin. Once you have a design that works well with ReLU, you can experiment with other activation types and tune their parameters.
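As an illustration of "other activation types and their parameters", the sketch below compares ReLU with two common alternatives that the text does not name: Leaky ReLU and ELU. Both have a tunable `alpha` that controls how negative inputs are treated; the values used here (0.1 and 1.0) are only example settings.

```python
import math


def relu(x: float) -> float:
    """Zero for negative inputs, identity for positive ones."""
    return max(0.0, x)


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Like ReLU, but negative inputs keep a small slope `alpha` instead of being zeroed."""
    return x if x > 0 else alpha * x


def elu(x: float, alpha: float = 1.0) -> float:
    """Exponential linear unit: smooth for negative inputs, saturating at -alpha."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


for x in [-2.0, -0.5, 0.0, 1.5]:
    print(f"x={x:+.1f}  relu={relu(x):+.3f}  "
          f"leaky(0.1)={leaky_relu(x, 0.1):+.3f}  elu(1.0)={elu(x):+.3f}")
```

All three agree on positive inputs, which is one reason swapping them usually changes results only slightly.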

Pooling

Most convolutional neural networks use an operation known as pooling. Pooling summarizes small regions of a feature map into single values and discards detail that is not needed for the task at hand; this is also known as downsampling. As you add more layers or finish a particular ‘stage,’ the network needs less fine-grained spatial detail, and each pooling step shrinks the feature maps that the following layers must process. So pooling is essential, especially if you want to add more convolutional layers.
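To see how quickly pooling shrinks the work for later layers, this sketch tracks the spatial size of a feature map through several stages. It assumes one 2×2 pooling with stride 2 and no padding per stage, and a hypothetical 224×224 input.

```python
def pooled_size(n: int, window: int = 2, stride: int = 2) -> int:
    """Output length along one axis for pooling without padding."""
    return (n - window) // stride + 1


def stage_sizes(input_size: int, num_stages: int) -> list:
    """Spatial size after each stage, assuming one 2x2/stride-2 pooling per stage."""
    sizes, n = [], input_size
    for _ in range(num_stages):
        n = pooled_size(n)
        sizes.append(n)
    return sizes


for stage, n in enumerate(stage_sizes(224, 5), start=1):
    # Each 2x2 pooling on an even-sized map keeps one value out of every four.
    print(f"after stage {stage}: {n}x{n} = {n * n} positions per channel")
```

The sizes go 112, 56, 28, 14, 7, so a convolution in the last stage touches about 1/1000 as many positions per channel as one applied at full resolution.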

The community is still divided on which type of pooling is best, but if you try both, the difference is usually negligible. To keep the strongest features, most people use max pooling (which keeps the maximum value within each window of the feature map) throughout their network, and then use average pooling (which takes the mean value over a region of a feature map) toward the end.
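The difference between the two pooling types is easiest to see side by side. This minimal sketch applies 2×2 pooling with stride 2 to a single hypothetical 4×4 feature map, assuming even height and width.

```python
from typing import Callable, List

Grid = List[List[float]]


def pool2x2(grid: Grid, reduce: Callable[[List[float]], float]) -> Grid:
    """2x2 pooling with stride 2 (assumes even height and width)."""
    return [[reduce([grid[i][j], grid[i][j + 1], grid[i + 1][j], grid[i + 1][j + 1]])
             for j in range(0, len(grid[0]), 2)]
            for i in range(0, len(grid), 2)]


def max_pool(grid: Grid) -> Grid:
    """Keep the largest value in each window."""
    return pool2x2(grid, max)


def avg_pool(grid: Grid) -> Grid:
    """Keep the mean value of each window."""
    return pool2x2(grid, lambda window: sum(window) / len(window))


fmap = [[1.0, 3.0, 0.0, 0.0],
        [2.0, 8.0, 0.0, 4.0],
        [0.0, 0.0, 5.0, 1.0],
        [0.0, 4.0, 1.0, 1.0]]
print(max_pool(fmap))  # [[8.0, 4.0], [4.0, 5.0]]
print(avg_pool(fmap))  # [[3.5, 1.0], [1.0, 2.0]]
```

Max pooling keeps the single strong activation (8.0) in the top-left window intact, while average pooling dilutes it to 3.5.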

Max pooling is more effective at preserving sharp features such as edges, while average pooling works well on smoother regions; averaging over the whole final feature map gives an excellent final vector representation of all the features. Most people do this just before the last dense (fully connected) layer. That layer's output then goes through a softmax, which normalizes the network's outputs into predicted probabilities over the different output classes.
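The end of the network described above (average pooling over the whole map, a dense layer, then softmax) can be sketched as three small functions. Everything here is hypothetical and stdlib-only: two 2×2 final feature maps, three output classes, and hand-picked weights instead of learned ones.

```python
import math
from typing import List


def global_avg_pool(fmaps: List[List[List[float]]]) -> List[float]:
    """Average each channel over all spatial positions: [C][H][W] -> [C]."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmaps]


def dense(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Fully connected layer: one weight row (and one bias) per output class."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits: List[float]) -> List[float]:
    """Convert scores to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical final feature maps: 2 channels of size 2x2.
fmaps = [[[1.0, 3.0], [2.0, 2.0]],
         [[0.0, 0.0], [4.0, 0.0]]]
features = global_avg_pool(fmaps)  # [2.0, 1.0]
logits = dense(features, [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]], [0.0, 0.0, 0.0])
probs = softmax(logits)
print(features, [round(p, 3) for p in probs])
```

Because the pooled vector has one entry per channel regardless of the map's height and width, the dense layer's size does not depend on the input resolution.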

Network Depth and Structure

The trade-offs between accuracy, network depth, and speed are usually quite simple. The more layers you add, the more accurate the network tends to become. However, each extra layer adds processing time, so the network also gets slower. You can usually tune the depth to find the right balance for your application, and after some practice you'll develop a good eye for estimating it.

Keep in mind, though, that the relationship between speed and accuracy is not one-to-one: as you add more layers, each new layer tends to contribute less extra accuracy while still adding its full share of computation.
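The cost side of this trade-off is easy to estimate; the accuracy side has to be measured on your own data. The sketch below counts convolution multiply-accumulates for a hypothetical plain network with four stages (64, 128, 256, and 512 channels), a 224×224 RGB input, 3×3 "same" convolutions, and 2×2 pooling after each stage. Pooling and the classifier head are ignored.

```python
def conv_macs(size: int, k: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates of a stride-1 'same' k x k convolution on a size x size map."""
    return size * size * k * k * c_in * c_out


def network_macs(layers_per_stage: int, input_size: int = 224,
                 widths: tuple = (64, 128, 256, 512)) -> int:
    """Total conv MACs of a hypothetical plain network.

    Each stage stacks `layers_per_stage` 3x3 convolutions, then 2x2 pooling
    halves the spatial size before the next stage.
    """
    total, size, c_in = 0, input_size, 3
    for width in widths:
        for _ in range(layers_per_stage):
            total += conv_macs(size, 3, c_in, width)
            c_in = width
        size //= 2
    return total


for depth in (1, 2, 3, 4):
    print(f"{depth} conv layer(s) per stage: {network_macs(depth) / 1e9:.2f} GMACs")
```

Every additional layer per stage adds the same fixed amount of computation, so cost grows linearly with depth even when the accuracy gained per layer shrinks.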

For the structure of your network, start from standard, well-benchmarked architectures, and for added speed, consider MobileNet-v2 and depthwise-separable convolution blocks. MobileNet-v2 was designed for mobile and other resource-constrained devices, so it can run efficiently on a CPU and be optimized to give almost real-time results. Being able to run your network on a CPU rather than a GPU can make operating it cheaper, since GPU hardware is more expensive.
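Depthwise-separable convolutions are faster because they split a regular convolution into a per-channel (depthwise) k×k filter followed by a 1×1 (pointwise) convolution that mixes channels. This sketch only counts weights (biases ignored) for equal input and output channel counts; it does not model the full MobileNet-v2 block, which also adds a 1×1 expansion layer and a linear bottleneck.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a regular k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k conv (one filter per input channel) plus a pointwise 1x1 conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


for c in (32, 128, 512):
    std = standard_conv_params(3, c, c)
    sep = depthwise_separable_params(3, c, c)
    print(f"{c} channels: standard {std}, separable {sep}, {std / sep:.1f}x fewer")
```

For a 3×3 kernel the saving approaches k² = 9 as the channel count grows, because the pointwise 1×1 term dominates the separable cost.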

Researchers expect that making the whole process cheaper will make computer vision and artificial intelligence more pervasive in our everyday lives. It also makes building faster and more accurate CNNs much more cost-effective.

Final Thoughts

A convolutional neural network is a deep learning technique that is gaining popularity because it makes visual recognition more effective. Like other deep learning techniques, it relies heavily on the quality and size of your training data. Given a well-rounded dataset, though, it can match or even exceed human performance on some visual recognition tasks.

Yet these benefits can come at a heavy cost in processing power, especially if the network is not optimized. Strategies such as 1×1 convolutions and pooling help it run more efficiently.